Table of contents:

- The World AI Cannes Festival: Innovation, Strategic Partnerships and the Future of Humanity in the Age of AI

- Navigating the Global Wave of AI Regulation

- Ethical AI and Compliance: WordLift’s Proactive Approach

- Contextualising Corporate Strategies: Navigating Open Issues in AI Regulation within the Larger Landscape

The World AI Cannes Festival: Innovation, Strategic Partnerships and the Future of Humanity in the Age of AI

The World AI Cannes Festival (WAICF) stands as a premier event in Europe, attracting decision-makers, companies, and innovators at the forefront of developing groundbreaking AI strategies and applications. With an impressive attendance of 16,000 individuals, featuring 300 international speakers and 230 exhibitors, the festival transforms Cannes into the European hub of cutting-edge technologies, momentarily shifting focus from its renowned status as a global cinema stage.

This year marked WordLift’s inaugural participation in the festival, where we capitalised on the diverse opportunities the event offered. We were exposed to a myriad of disruptive applications, such as the palm-based identity solution showcased by Amazon to streamline the payment and buying experience for consumers. Furthermore, we observed the emergence of strategic partnerships among key market players, exemplified by the collaboration between AMD and Hugging Face. As Julien Simon, Chief Evangelist of Hugging Face, aptly stated, “There is a de facto monopoly on computers today, and the market is hungry for supply.”

Engaging in thought-provoking discussions on the future intersections of humanity and AI was a highlight of the event. One of the most captivating keynotes was delivered by Yann LeCun, Chief AI Scientist at Meta and a pioneer in Deep Learning. LeCun discussed the limitations of Large Language Models (LLMs), emphasising that their training is predominantly based on language, which constitutes only a fraction of human knowledge, most of which is derived from experience. One of his slides, provocatively titled “Auto-regressive LLMs suck”, underscored his message that while machines will eventually surpass human intelligence, current models are far from achieving this feat. LeCun also shared insights into his latest work aimed at bridging this gap.

Navigating the Global Wave of AI Regulation

I will leave the technical advancements showcased in Cannes to the more technically equipped participants and focus instead on a topic that, while less glamorous, holds great relevance: the anticipated impact of forthcoming AI regulation on innovation and on players in the digital markets. This theme was prominent during the festival, with several talks dedicated to it and many discussions touching upon related aspects.

Although in Europe the conversation predominantly revolves around the finalisation of the AI Act (with its final text expected in April 2024, following the EU Parliament’s vote), it’s essential to recognise that this is now a global trend. Pam Dixon, executive director of the World Privacy Forum, presented compelling data illustrating the exponential rise in governmental activities concerning AI regulation, highlighting the considerable variations in responses across jurisdictions. While some initially speculated that AI regulation might follow a path similar to that of the GDPR, which established a quasi-global standard in data protection to which most entities adapted, it’s becoming evident that this won’t be the case. The OECD AI Observatory, for instance, is compiling a database of national AI policy strategies and initiatives, currently documenting over 1,000 policy initiatives from 70 countries worldwide.

One audience question particularly resonated with me: ‘If you are a small company operating in this evolving ecosystem, facing the challenges of this emerging regulatory landscape, where should you begin?’ To be honest, there’s no definitive answer to this question at the moment. Although the AI Act has yet to become EU law, and its effective enforcement timelines are relatively lengthy, WordLift, like many others in this industry, is already fielding numerous requests from customers seeking reassurance on our compliance strategies. Luckily, WordLift has been committed to fostering a responsible approach to innovation since its establishment.

Ethical AI and Compliance: WordLift’s Proactive Approach

For those working at the intersection of AI and search engine optimization (SEO), ethical AI practices are a paramount concern. WordLift has conscientiously crafted an approach to AI aimed at empowering content creators and marketers while upholding fundamental human values and rights. Previous contributions on this blog have covered various aspects of ethical AI, including legal considerations, content creation, and the use of AI in SEO for enterprise settings. They explain in detail how WordLift translates the concept of trustworthy AI into company practices, ensuring that its AI-powered tools and services are ethical, fair, and aligned with the best interests of users and society at large.

While the AI Act mandates that only high-risk AI system providers undertake an impact assessment to identify the risks associated with their initiatives and apply suitable risk management strategies, at WordLift we have proactively seized this opportunity to enhance communication with stakeholders, developing a framework articulating our company’s principles across four main pillars:

- Embracing a ‘Human-in-the-loop’ approach to combine AI-based automation with human oversight, in order to guarantee content excellence.

- Ensuring Data Protection & IP through robust processes safeguarding client data, maintaining confidentiality, and upholding intellectual property rights.

- Prioritising Security with a focus on safeguarding against potential vulnerabilities in our generative AI services architecture.

- Promoting Economic and Environmental Sustainability by committing to open-source technologies and employing small-scale AI models to minimise our environmental footprint.

We are currently in the process of documenting each pillar in terms of the specific choices and workflows adopted.

Contextualising Corporate Strategies: Navigating Open Issues in AI Regulation within the Larger Landscape

However, it’s essential to contextualise the compliance policies of SMEs and startups within the bigger picture, where mergers and partnerships between major players providing critical upstream inputs (such as cloud infrastructure and foundation models) and leading AI startups have become a trend.

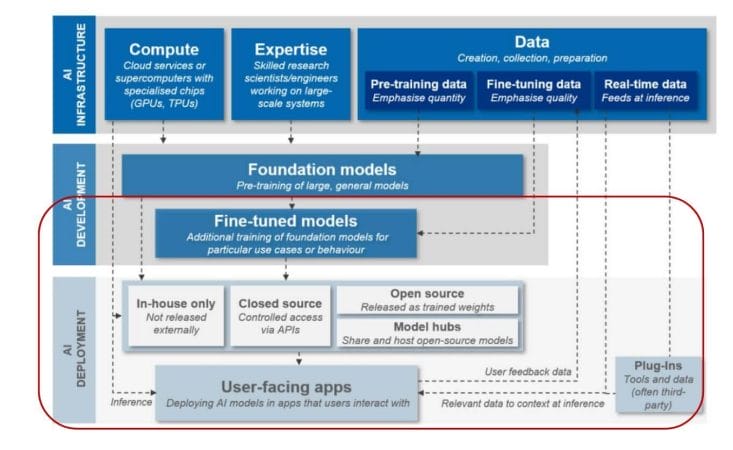

This trend is exemplified by the recent investigation launched by the US Federal Trade Commission into generative AI partnerships, and it suggests that the market for Foundation Models (FMs) may be moving towards a certain degree of consolidation. This potential consolidation in the upstream markets could have negative implications for the downstream markets where SMEs and startups operate. These downstream markets are mostly those in the red rectangle in the accompanying figure, extracted from the UK CMA review of AI FMs. Less competition in the upstream markets may lead to a decrease in the diversity of business models, and reduce both the flexibility to use multiple FMs and the accountability of FM providers for the outputs produced.

An overview of foundation model development, training and deployment

As highlighted by LeCun in his keynote, we need diverse AI systems for the same reason we need a diverse press, and for this the role of open source is critical.

In this respect, EU policymakers have landed, after heated debates, on a two-tier approach to the regulation of FMs. The first tier entails a set of transparency obligations and a demonstration of compliance with copyright laws for all FM providers, with the exception of those whose models are used only in research or published under an open-source licence.

The exception does not apply to the second tier, which instead covers models classified as having high impact (or carrying systemic risks, art 52a), a classification presumed from the amount of compute used for their training (expressed in floating-point operations, or FLOPs). According to the current text, today only models such as GPT-4 and Meta’s Llama 2 would fall into the second tier. While the tiering rationale has been criticised by part of the scientific community, the EU legislators seem to have accepted the proportional approach (treating different uses and development modalities distinctly) advocated by open-source (OS) ecosystems, and the compromise reached is viewed as promising by the OS community.
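To make the compute-based presumption more concrete, here is a minimal sketch in Python. The AI Act text sets the presumption at training compute greater than 10^25 FLOPs. Everything else below is an assumption made for illustration: the "6 × parameters × training tokens" estimate is a common rule of thumb rather than a method prescribed by the Act, and the two models and their figures are hypothetical.

```python
# Illustrative sketch only. The 6 * N * D estimate is a widely used rule of
# thumb (an assumption here), not the measurement method defined by the AI Act.

# Presumption threshold for high-impact / systemic-risk models in the AI Act.
SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25


def estimate_training_flops(parameters: float, training_tokens: float) -> float:
    """Approximate total training compute as 6 * parameters * tokens."""
    return 6.0 * parameters * training_tokens


def presumed_high_impact(training_flops: float) -> bool:
    """Return True when training compute is strictly above the threshold."""
    return training_flops > SYSTEMIC_RISK_THRESHOLD_FLOPS


# Hypothetical models with made-up sizes; these are not real-world figures.
hypothetical_models = {
    "small-model": (7e9, 1e12),      # 7B parameters, 1T training tokens
    "frontier-model": (1e12, 1e13),  # 1T parameters, 10T training tokens
}

for name, (params, tokens) in hypothetical_models.items():
    flops = estimate_training_flops(params, tokens)
    tier = "second tier" if presumed_high_impact(flops) else "first tier"
    print(f"{name}: ~{flops:.1e} FLOPs -> {tier}")
```

A back-of-the-envelope check like this can only hint at where a model might sit relative to the threshold; the actual classification depends on how cumulative training compute is measured and reported under the Act.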

The broad exemption of free and open-source AI models from the Act, along with the adoption of the proportionality principle for SMEs (art 60), appears to be a reasonable compromise at this stage. The latter principle stipulates that in cases involving modification or fine-tuning of a model, providers’ obligations should be limited to those specific changes. For instance, this could involve updating existing technical documentation to include information on modifications, including new training data sources. This approach could be successful in regulating potential risks associated with AI technology without stifling innovation.

However, as the saying goes, the devil is in the details. The practical implications for the entire AI ecosystem will only become apparent in the coming months or years, especially when the newly established AI Office, tasked with implementing many provisions of the AI Act, begins its work. Among its many responsibilities, the AI Office will also oversee the adjustment of the FLOPs threshold over time to reflect technological and industrial changes.

In the best-case scenario, legislative clarity will be achieved in the coming months through a flood of recommendations, guidelines, implementing and delegated acts, and codes of conduct (such as the voluntary codes of conduct introduced by art 69 for the application of specific requirements). However, there is concern about the burden this may place on SMEs and startups active in the lower portion of the CMA chart, which risk being inundated with paperwork and facing relatively high compliance costs to navigate the new landscape.

The resources that companies like ours will need to allocate to stay abreast of enforcement may detract from other potential contributions to the framework governing AI technology development in the years ahead, such as participation in the standardisation development process. Lastly, a note on a broader yet relatively underdeveloped issue in the legislation: who within the supply chain will be held accountable for damages caused by high-risk AI products or systems? Legal clarity regarding liability is crucial for fostering productive conversations among stakeholders in the AI value chain, particularly between developers and deployers.

Let’s hope that future iterations of the AI regulatory framework will effectively distribute responsibilities among them, ultimately leading to a fair allocation.

Questions to Guide the Reader

What is the significance of the World AI Cannes Festival (WAICF) for AI innovators and decision-makers?

The festival stands as a premier platform for the exhibition and discourse of AI advancements and strategies. Attending this event offers a unique opportunity to delve into cutting-edge applications, connect with key players across the AI value chain, gain insights into their business strategies, and participate in high-level discussions exploring the evolving intersections of humanity and AI.

How does the anticipated AI regulation in Europe impact innovation and the digital market landscape?

The latest version of the AI Act reflects over two years of negotiations involving political and business stakeholders in the field. The inclusion of broad exemptions for free and open-source AI models, coupled with the adoption of the proportionality principle for SMEs, presents a potential avenue for regulating AI technology’s risks without impeding innovation. However, the true impact will only become evident during implementation. Concerns arise regarding compliance costs, particularly for smaller entities, and the lack of legal clarity surrounding liability, which is vital for facilitating constructive dialogues among stakeholders in the AI value chain, particularly between developers and deployers.

What are WordLift’s strategies for aligning with ethical AI practices and upcoming regulations?

Since its inception, WordLift has adopted a proactive approach characterised by a commitment to ethical AI. Building upon this foundation, the company is now actively preparing for regulatory compliance by articulating a comprehensive framework based on four pillars.

How might the consolidation of the market for Foundation Models (FM) affect SMEs and startups in the AI sector?

As larger companies acquire dominance in the market for FMs, SMEs and startups may face greater hurdles in accessing these foundational technologies, potentially leading to increased dependency on them. This could pose a risk of stifling innovation over time. Regulators must closely monitor upstream markets to prevent a reduction in the diversity of business models, ensuring that smaller players retain flexibility in utilising multiple FMs and holding FM providers accountable for the outputs they generate.

The post Navigating the Future of AI Regulation: Insights from the WAICF 2024 appeared first on WordLift Blog.