Table of contents:

- Schemas are everywhere

- Tim Berners-Lee’s and WordLift’s visions changed the way I see schemas and SEO forever

- The debate in the Women in Tech SEO community

- SEO visionaries have a secret advantage

- Indicators as an educated approach

- The pulse of the SEO community

- Schema markup webmaster guidelines

- Schema markup investments

- The past, the present and the future: what do they show us?

- Global Data generated globally and why we’ll deeply drown in it

- Research and reports

- The Why behind structured data: complexity layers

- The model explaining complexity layers is complex too

- The Generative AI Challenge

- Measurable schema markup case studies

- Final words

Schemas Are Everywhere

Schemas are ubiquitous in the data landscape. In the past, data exchanges within and outside companies were relatively straightforward, especially with monolithic architectures. However, the rise of distributed architectures has led to an exponential increase in touch points and, consequently, specifications for data exchanges. This repetition of describing the same data in various languages, formats, and types has resulted in data getting lost and falling out of sync, presenting challenges to data quality.

Schemas serve as foundational elements in data management, offering a fundamental structure that dictates how data is organized and presented. Their extensive use is rooted in their ability to furnish a standardized blueprint for representing and structuring information across diverse domains.

A key function of schemas is to establish a structured framework for data by specifying types, relationships, and constraints. This methodical approach enhances data comprehensibility for both human interpretation and machine processing. Additionally, schemas foster interoperability by cultivating a shared understanding of data structures among different systems and platforms, facilitating smooth data exchange and integration.

In the realm of data operations (dataOps), which centers around automating data-related processes, schemas play a pivotal role in defining the structure of data pipelines. This ensures a seamless flow of data through various operational stages. Concurrently, schemas significantly contribute to data integrity by enforcing rules and constraints, thereby preventing inconsistencies and errors.

The impact of schemas extends to data quality, where they play a crucial role in validating and cleansing incoming data by defining data types, constraints, and relationships. This, in turn, enhances the overall quality of the dataset. Moreover, schemas support controlled changes to data structures over time, enabling adaptations to evolving business needs without disrupting existing data.

In the context of data synchronization, schemas are indispensable for ensuring that data shared across distributed databases adheres to a standardized structure. This minimizes the likelihood of inconsistencies and mismatches when data is exchanged between different sources and destinations.

Beyond their structural role, schemas also function as a form of metadata, offering valuable information about the structure and semantics of the data. Effective metadata management is essential for comprehending, governing, and maintaining the entire data lifecycle.

Tim Berners-Lee’s And Wordlift’s Visions Changed The Way I See Schemas And SEO Forever

Schemas like schema markup truly permeate every aspect of our world. I find their influence fascinating, not just in their inherent power but also in their ability to bring together individuals from diverse backgrounds, cultures, technical setups, and languages to reach the same insights and conclusions through the interoperability they facilitate.

My deeper engagement with Schema.org began after a more profound exploration during my visit to CERN in 2019, courtesy of my partner. While there, I had the privilege of discussing with individuals working at CERN and even had a glimpse of Tim Berners-Lee’s office. For those unfamiliar, Tim is the mind behind the invention of the World Wide Web (WWW). Even though I had previously encountered schema structures in a comprehensive course on Web-based systems, it was during this visit that I truly grasped the broader vision and the trajectory shaping the future of it.

However, this isn’t a narrative about my visit to CERN. I simply want to emphasize the significance of understanding where to direct your attention and how to approach thinking when it comes to recognizing visionaries in the SEO field. Even though Tim Berners-Lee wasn’t specifically contemplating SEO or had any direct connection to it, he, as a computer scientist, aimed to enhance CERN’s internal documentation handling and, in the process, ended up positively transforming the world.

The Debate In The Women In Tech SEO Community

I feel incredibly fortunate to be a part of the Women in Tech SEO community. Areej AbuAli did an outstanding job of bringing together all the brilliant women in the field here. Recently, we had a discussion about the future and necessity of schema markup overall, presenting two contrasting worldviews:

- One perspective suggests that schema markup will diminish over time due to the highly advanced and rapidly improving NLP and NLU technology at Google, which supposedly requires minimal assistance for content understanding.

- The opposing view contends that schema markup is here to stay, backed by Google’s active investments in it.

While I passionately advocated for the latter, I must be transparent and admit that I held the former viewpoint a few years ago. During interviews when questioned about trends in the SEO field, I used to align with the first point. Reflecting on it now, I realize how my perspective has evolved. Was I really blind, or was there a bigger picture? Is there a scientific approach or method that can conclusively settle this debate once and for all?

Let’s agree to disagree, that’s my first goal  Before delving in, I’d like to extend my gratitude to those who have significantly influenced my thought process:

Before delving in, I’d like to extend my gratitude to those who have significantly influenced my thought process:

- Anne Berlin, Brenda Malone, and The Gray Company for providing the initial arguments that ignited my research journey to craft this article.

- A special acknowledgement to Tyler Blankenship (HomeToGo) for sparking the early version of this presentation during our insightful discussion at InHouseSEODay Berlin 2023.

- A big shout-out to the SEO community that actively participated in my poll on X (Twitter).

- And to you, the consumer of this content, I genuinely hope to meet your high expectations and contribute to your further advancement with my research.

SEO Visionaries Have A Secret Advantage

Let me pose my first question to you, dear reader: Can you articulate the present to predict the future?

Time to embrace some open-minded and analytical thinking.

You see, having vision is crucial. Visionary SEO leaders aren’t merely lucky; they’ve mastered the art of analyzing information, learning from history, identifying patterns and leveraging both approaches to predict and anticipate the future. I’ve pondered whether I can find a way to become one myself, especially if I’m not one already.

The reassuring news is that being visionary is a skill that can be cultivated. Let me show you how.

Indicators As An Educated Approach

My time at the Faculty of Computer Science and Engineering – Skopje has been incredibly enlightening in terms of my scientific endeavors. These experiences have equipped me with a framework to approach any problem: leveraging indicators in my analysis.

Well-formulated indicators are not only straightforward to comprehend but also prove to be valuable when formulating initial hypotheses. Now, as I navigate through the process of addressing the pivotal question of whether SEO schema markup is a lasting trend, I’ve outlined the following indicators to guide my thinking:

- The pulse of the SEO community

- Schema markup webmaster guidelines

- Schema markup investments

- Research and reports

- Measurable schema markup case studies

- Complexity layers

- The GenAI challenge

The pulse of the SEO community

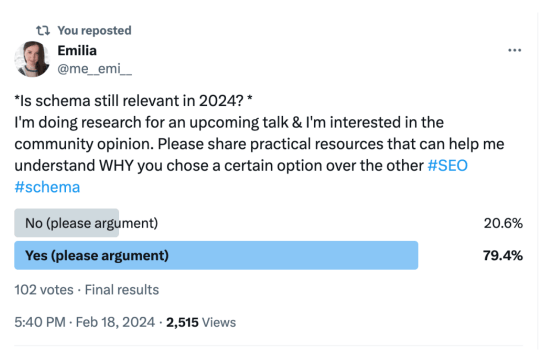

I decided to gauge the pulse by exploring the sentiments within the broader SEO community regarding the topic on X. With a small yet dynamic community centered around my interests, such as technical SEO and content engineering, this presented the perfect chance to gather preliminary insights on a larger scale. The question I posed was: is schema still relevant in 2024? Check out the results below.

Approximately 80% of the votes (totaling 102) leaned towards supporting the continued significance of schema markup in 2024. However, when considering the future beyond that, can we adopt a more scientific approach to ascertain whether structured data via schema markup will stand the test of time or merely be a fleeting trend?

Schema markup webmaster guidelines

The poll was decent, but it’s susceptible to biases, and some may contend that it lacks statistical significance on a global scale, given that I collected just over 100 answers. The community of SEO professionals worldwide is much larger, and I couldn’t ensure that the responses exclusively came from SEO professionals.

This led me to opt for a historical examination of the webmaster guidelines offered by the developer relations teams at two of the largest search engines globally: Google and Bing.

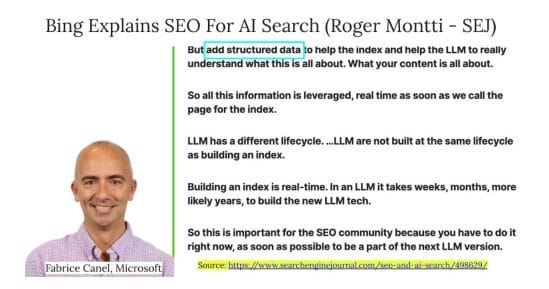

Examining the guidance offered by John Muller and Fabrice Cannel, whether through blog posts or official webmaster documentation, leads us to the conclusion that schema markup remains relevant. A notable piece by Rogger Monti on Search Engine Journal, titled “Bing Explains SEO For AI Search” underscores the significance of adding structured data for content understanding.

See for yourself. If that’s not enough, let’s analyze the historical trendline on schema-related updates on Google’s side: there were 11 positive schema markup updates since August 23rd, 2023 or 13 in total.

Deprecated schema types:

- August 23, 2023: HowTo is removed

- August 23, 2023: FAQ is is removed

New schema types and rich snippets to build your digital passport:

- October 16, 2023: Vehicle structured data listing was added.

- October 2023: Google emphasizes importance of schema on SCL Zurich 2023.

- November 15, 2023: Course info structured data was added

- November 27, 2023: documentations for ProfilePage, DiscussionForum were added along with enhanced guidelines for Q&A page (reliance on SD to develop EEAT).

- November 29, 2023: an update for organization structured data.

- December 4, 2023: Vacation Rental structured data was added.

- January 2024: discount rich results on all devices was launched in the U.S.

- February 2024: structured data support for product variants is added.

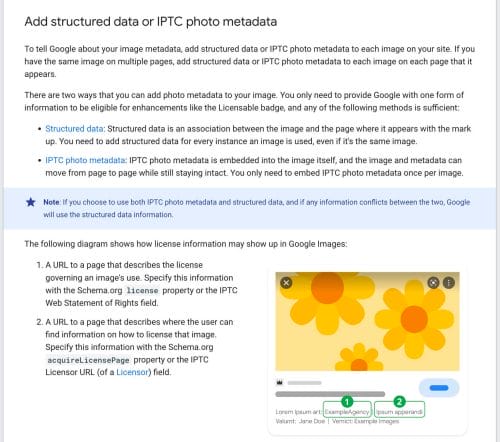

- February 2024: Google urges using metadata for AI-generated images.

- February 2024: Google announces increased support for GS1 on the Global Forum 2024!

- March, 2024: Google announces structured data carousels (beta for Itemlist in combination with other types)

I could get similar insights from Brodie Clark’s SERP features notes. This has never happened before in such a small timeline! Never. Check the Archive tab on Google Search Central blog to analyze independently.

This leads me to the next indicator.

Schema markup investments

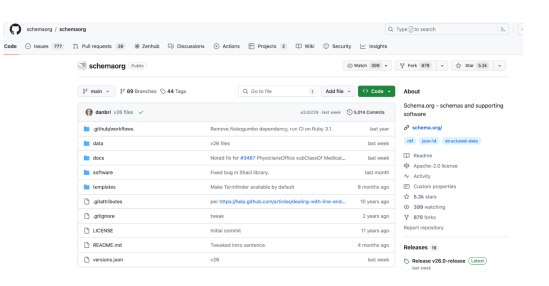

Well, this one is huge: Google just announced increased support for GS1 on the Global Forum 2024! Now, everything falls into place, and the narrative doesn’t end there. For those tracking the schema.org repository managed by Dan Brickley, a Google engineer, it’s evident that the entire Schema.org project is very much active. Schema is here to stay, it’s truly not going anywhere, I thought, but are Google’s or search engines’ investments a reliable indicator to make an informed judgment?

I’ll take on the role of devil’s advocate once more and want to remind you that Google has had its share of failures, including Google Optimize and numerous other projects it heavily invested in. Public relations hype can be deceptive and isn’t always reliable. Unfortunately, schema.org isn’t disclosed in their financial reports, preventing us from gauging how much search engines invest to shape our perspectives. Nevertheless, delving into a more thorough historical analysis can help clarify the picture I’ve just presented to you. Hope you’ll enjoy the ride.

The past, the present and the future: what do they show us?

Let’s start with the mission that Tim Berners-Lee crafted and cultivated at CERN: the findability and interoperability of data. Instead of delving into the intricate details of how he developed the World Wide Web and the entire history leading up to it, let’s focus on the byproducts it generated: Linked Data and the 5-star rating system for data publication on the Web.

Let me clarify this a bit. The essence of the 5-star rating system lies in the idea that we should organize our data in a standardized format and link it using URIs and URLs to provide context on its meaning. The ultimate aim is to offer organized content, or as described in Content Rules, to craft “semantically rich, format-independent, modularized content that can be automatically discovered, reconfigured, and adapted.”

Sounds like a solid plan, right?

Well, only if we had been quicker to adapt our behaviors and more forward-thinking at that time…

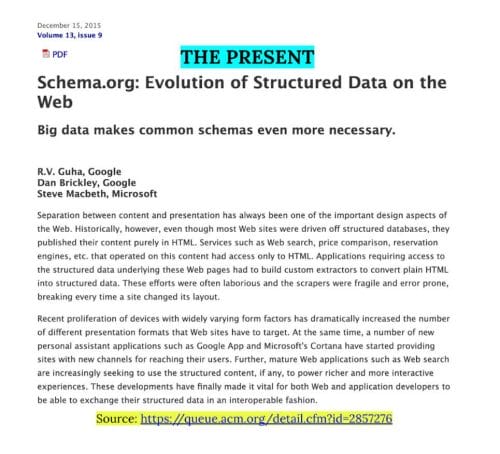

It took humanity more than 20 years to reach a consensus on a common standard for organizing website data in a structured web, and I’m referring to the establishment of Schema.org in 2015. To be more specific, it wasn’t humanity at large but rather the recognition of potential and commercial interest by major tech players such as Google, Microsoft, and others that spurred the development and cultivation of this project for the benefit of everyone.

One quote which is at the beginning of the paper particularly stands out:

“Big data makes common schema even more necessary”

As if it wasn’t already incredibly difficult with all these microservices, data storages, and data layers spanning different organizations, now we have to consider the aspect of big data as well.

Now that I’m familiar with the past and the present, can I truly catch a glimpse into the future? What does the future hold for us, enthusiasts of linked data? Will our aspirations be acknowledged at all? I’ll answer this in the upcoming sections.

Global data generated globally and why we’ll deeply drown in it

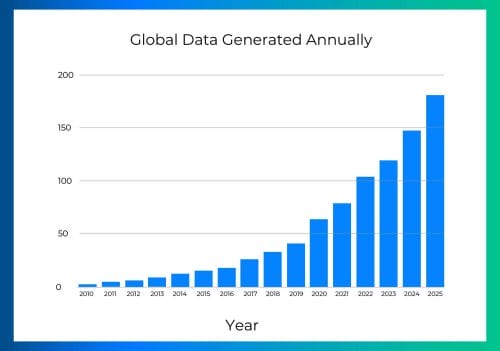

The authors were confident that big data is rendering common schemas even more crucial. But just how massive is the data, and how rapidly is it growing year after year?

As per Exploding Topics, a platform for trend analysis, a staggering 120 zettabytes are generated annually, with a notable statement noting that “90% of the world’s data was generated in the last two years alone.” That’s an exceptionally large volume of data skyrocketing at a lightning-fast pace! In mathematical terms, that’s exponential growth but it doesn’t even stop there: we need to factor generative AI in too. Oh. My. God. Good luck in estimating that.

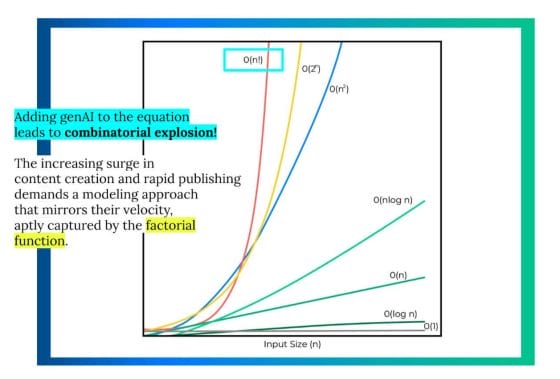

Incorporating generative AI and its impact on generating even more data on the web with minimal investment in time and money results in a combinatorial explosion!

The escalating wave of content creation and swift publishing necessitates a modeling approach that aligns with their velocity, effectively captured by the factorial function. The exponential function is no longer sufficient – factorial comes closest to modeling our reality at this scale.

Exponential growth: but we need to factor in the GenAI impact too!

We’re making significant strides in grasping the significance of schema implementation, but one could argue that Google now boasts a BERT-like setup with advancements like MUM, Gemini, and more. It’s truly at the forefront of natural language understanding. This provides a valid point for debate.

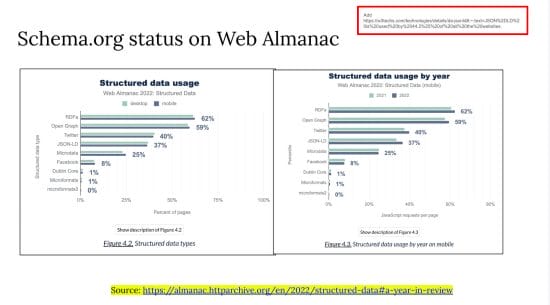

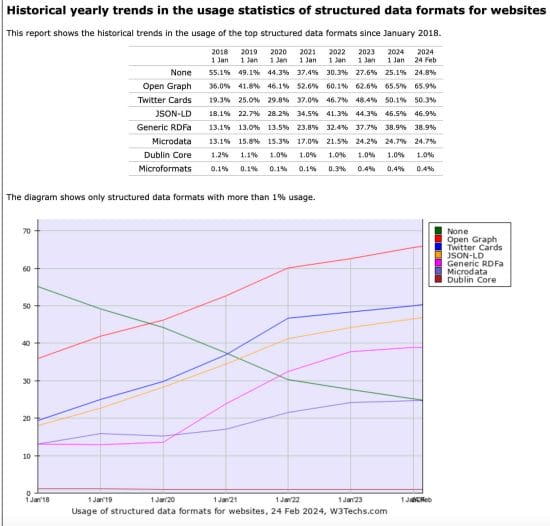

I need to delve into a more critical analysis to uncover better supporting evidence: are there statistics that illustrate the growth of schema usage over time and indicate where it is headed?

Let’s continue to the next indicator.

Research and reports

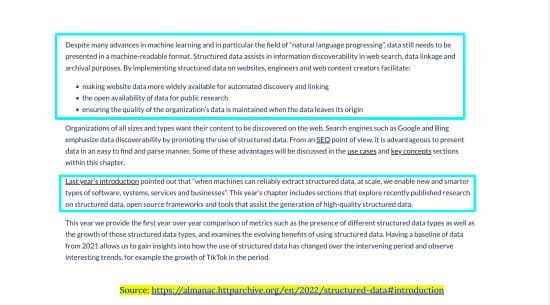

The initial thought that crossed my mind was to delve into the insights provided on The Web Almanac website. Specifically, I focused on the section about Structured Data, and it’s worth noting that the latest report available is from 2022. I’ve included screenshots below for your reference.

The key point to highlight is encapsulated in the following quote:

“Despite numerous advancements in machine learning, especially in the realm of natural language processing, it remains imperative

to present data in a format that is machine-readable.”

Here, you’ll find additional insights on the status and growth of structured data in the upcoming figures, sourced from W3Techs – World Wide Web Technology Surveys:

Examine the figures. Schema is showing a distinct upward trend, which is promising – I’ve finally come across something more backable. Now I can confirm through data that schema is likely here to say.

The next question for you, dear reader, is how to establish a tangible connection between schema and a measurable business use case?

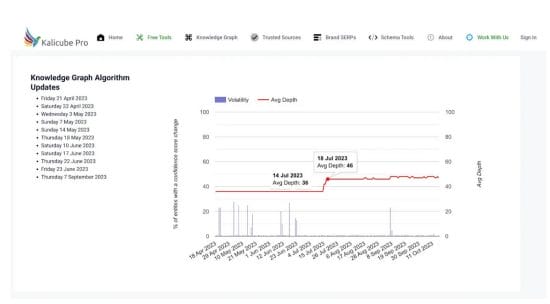

Enter the “Killer Whale” update. Fortunately, I have a personal connection with Jason Barnard and closely follow his work. I instantly remembered the E-E-A-T Knowledge Graph 2023 update he discussed in Search Engine Land. For those unfamiliar, Jason has created his own database where he monitors thousands of entities, knowledge panels, and SERP behavior, where all of them are super important for Google’s natural language understanding capabilities.

Why is this significant? Well, knowledge panels reflect Google’s explicit comprehension of named entities or the robustness of its data understanding capabilities. That is why Jason’s ultimate objective was to quantify volatility using Kalicube’s Knowledge Graph Sensor(created in 2015).

As Jason puts it, “Google’s SERPs were remarkably volatile on those days, too – for the first time ever.” Check out the dates below.

The Google Knowledge Graph is undergoing significant changes, and all the insights shared in his SEJ article distinctly highlight that Google is methodically evolving its knowledge graph. The focus is on diversifying points of reference, with a particular emphasis on decreasing dependence on Wikidata. Why would someone do that?

When considering structured data, Wikidata is the first association that comes to mind, at least for me. The decision to phase out Wikidata references indicates Google’s confidence in the current state of its knowledge graph. I confidently speculate that Google aims to depend more on its proprietary technology, reducing reliance and establishing a protective barrier around its data. Another driving force behind this initiative is the growing significance of structured formats. Google encourages businesses to integrate more structured, findable information, be it through their Google Business profiles, Merchant Centers, or schema markup. I’ll tweak the original quote and confidently assert that:

“Findabledata, not just data,

is the new oil in the world”

There are numerous reasons for this, leading me to the sixth indicator: complexity layers. This is where it becomes genuinely fascinating. Please bear with me.

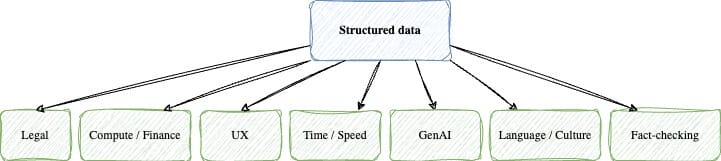

The Why behind structured data: complexity layers

Structured data holds significant importance for various reasons. Utilizing structured data with schema markup facilitates the generation, cleansing, unification, and merging of real-world layers – not just data layers – that play a crucial role in mindful; data curation and dataOps within companies.

I call them complexity layers.

I kind of touched on complexity layers when I discussed data growth year over year but let’s take a deeper dive now.

Compute

Tyler, if you happen to come across this article, I’d like to extend my appreciation for your thought-provoking questions and the insightful discussion we had in Berlin. The entire discussion on complexity layers in this section is inspired by our conversation and originates from the notes I took during that session.

The capacity of compute is directly tied to the available financial resources and the expertise in optimizing data collection strategies and Extract-Transform-Load (ETL) processes. Google, being financially strong, can invest in substantial compute power. Furthermore, their highly skilled engineers possess the expertise to mathematically and algorithmically optimize workflows for processing larger volumes of data efficiently.

However, even for Google, compute resources are not limitless, especially considering the rapid growth of data they need to contend with (as discussed in the “Global Data generated globally and why we’ll deeply drown in it” section). The only sustainable approach for them to handle critical data at scale is to establish and enforce unified data standardization and data publishing wherever feasible.

Sound familiar? Well, that’s precisely what structured data through schema markup brings to the table.

Content generated by AI, if not refined through processes like rewriting or fact-checking, has the potential to significantly degrade the quality of search engines such as Google and Bing. The importance of quality assurance processes has never been more important than it is now. Hence, navigating compute resource management becomes especially challenging in the age of generative AI. The key to mitigating data complexity lies in leveraging structured data, such as schema markup. Therefore, I’ll modify Zdenko Vrandecic’s original quote ““In a world of infinite content, knowledge becomes valuable” to the following:

“In a world of infinite [AI-generated] content,

[reliable] knowledge becomes valuable”

Even the impressive Amsive team, led by the fantastic Lily Ray, wrote about this, describing the handling of unstructured data as a crucial aspect of AI readiness: “this lack of structure puts a burden on large language models (LLMs) developers to provide the missing structure, and to help their tools and systems continue to grow. As LLMs and AI-powered tools seek real-time information, it’s likely they will rely on signals like search engines for determining source trustworthiness, accuracy, and reliability”. This brings me to several new indicators that I will delve into, but for now, let’s focus on the legal aspect.

Legal

Organizing unstructured data, especially when dealing with Personally Identifiable Information (PII), demands compliance with data protection laws like GDPR and local regulations. Essential considerations encompass securing consent, implementing anonymization or pseudonymization, ensuring data security, practicing data minimization, upholding transparency, and seeking legal guidance. Adhering to these measures is crucial to steer clear of legal repercussions, including fines and damage to one’s reputation. While fines can be paid, repairing reputational damage is a tougher challenge.

Following the rules of data protection laws is crucial, even for big tech players who, by law, must secure explicit consent before delving into individuals’ data. They also need to explore methods like anonymization and pseudonymization to mitigate risks and fortify their data defenses through encryption and access controls.

This often leads major tech companies to actively collaborate with legal experts or data protection officers, ensuring their data practices stay in sync with the ever-changing legal landscape. Can you guess how structured data through schema markup helps address these challenges?

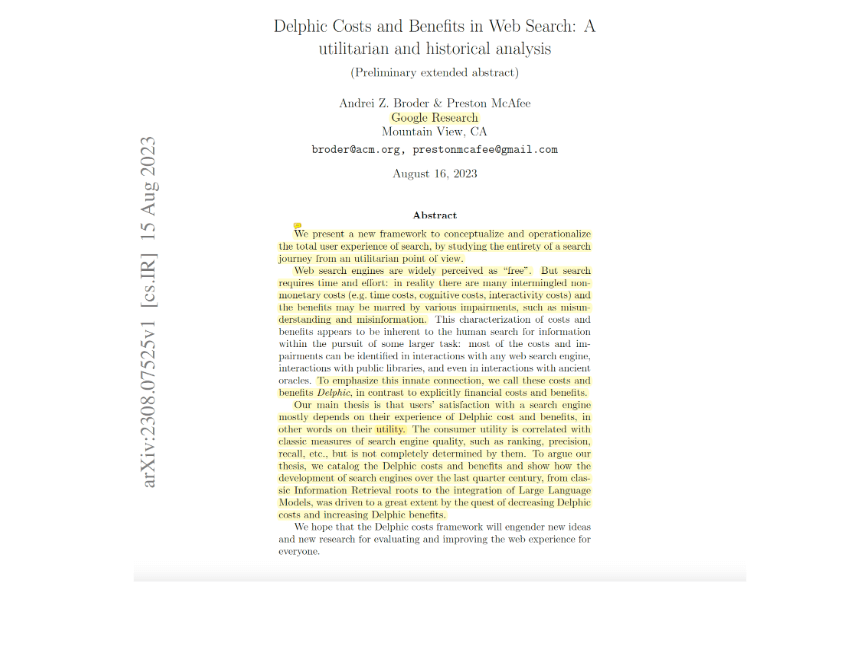

User experience (UX)

One of the trickiest scenarios for employing structured data. You see, user experience doesn’t just hinge on the computer cost but is also influenced by non-monetary factors like time, cognitive effort, and interactivity. Navigating the web demands cognitive investments and instruments, such as time for exploration and effort to comprehend the data and user interfaces presented.

These intricacies are well-explored in the Google Research’s paper titled “Delphic Costs and Benefits in Web Search: A Utilitarian and Historical Analysis.”

To cite the authors, “we call these costs and benefits Delphic, in contrast to explicitly financial costs and benefits…Our main thesis is that users’ satisfaction with a search engine mostly depends on their experience of Delphic cost and benefits, in other words on their utility. The consumer utility is correlated with classic measures of search engine quality, such as ranking, precision, recall, etc., but is not completely determined by them…”.

The authors identify many intermingled costs to search:

- Access costs: for a suitable device and internet bandwidth

- Cognitive costs: to formulate and reformulate the query, parse the search result page, choose relevant results, etc.

- Interactivity costs: to type, scroll, view, click, listen, and so on

- Waiting costs: for results and processing them, time costs to task completion.

How about minimizing the costs of accessing information online by standardizing data formats, facilitating swift and scalable information retrieval through the use of schema markup?

Time / Speed

This leads me to the next indicator, time to information (TTI).

Time to information typically refers to the duration or elapsed time it takes to retrieve, access, or obtain the desired information. It is a metric used to measure the efficiency and speed of accessing relevant data or content, particularly in the context of information retrieval systems, search engines, or data processing. The goal is often to minimize the time it takes for users or systems to obtain the information they are seeking, contributing to a more efficient and satisfactory user experience. While not easily quantifiable, this aspect holds immense significance, especially in domains where the timely acquisition of critical information within a short interval is make or break.

Consider scenarios like limited-time offers on e-commerce and coupon platforms or real-time updates on protests, natural disasters, sports results, or any time-sensitive information. Even with top-tier cloud and data infrastructure, such as what Google boasts, the challenge lies in ensuring the prompt retrieval of the most up-to-date data. APIs are a potential solution, but they entail complex collaborations and partnerships between companies. Another avenue, which doesn’t involve legal and financial intricacies, is exerting pressure on website owners to structure and publish their data in a standardized manner.

Again, we need structured data like schema markup.

Generative AI

Do you see why I’m labeling them as complexity layers? Well, if they weren’t intricate enough already, you have to consider the added layer of complexity introduced by generative AI. As I’m crafting this section, I’m recognizing that it’s sufficiently intricate to warrant a separate discussion. I trust that you, dear reader, will reach the same conclusion and stay with me until I lay out all the facts before diving into the narrative for this section. If you want to jump directly to it, search for The Generative AI challenge section that will come later in this post.

Geography

Oh, this one’s a tricky one, and I name it the geo-alignment problem.

Dealing with complexities like UX, compute, legal, and the rest is complex enough, but now we’ve got to grapple with geographical considerations too.

Let me illustrate with a simple example using McDonald’s: no matter where you are in the world, the logo remains consistent and recognizable, and the core offerings are more or less the same (with some minor differences between regions). It serves as a universal symbol for affordable and quickly prepared fast food. Whether you’re in Italy, the U.S., or Thailand, you always know what McDonald’s represents.

The challenge here is that McDonald’s is just one business, one entity. We have billions of entities that we need to perceive in a consistent manner, not just in how we visually perceive them but also in how we consume them. This ensures that individuals from different corners of the globe can share a consistent experience, a mutual understanding of entities and actions when conducting online searches. Now, throw in the language barrier, and it becomes clear – schema markup is essential to standardize and streamline this process.

Fact-checking

Hmm, I believe I’ll include this in the Generative AI challenge section as well. Please bear with me

The model describing complexity layers is complex too

These layers aren’t isolated from each other, nor are they neatly stacked in an intuitive manner. If I were to visualize it for you, you might expect something like the following image: you tackle compute, then integrate legal, solve that too, and move on to UX, and so forth. Makes sense, right?

Well, not exactly. These layers are intertwined, tangled, and follow an unpredictable trajectory. They form a part of a complex network system, making it extremely challenging to anticipate obstacles. Something more like this:

Now, remember the combinatorial explosion? Good luck in dealing with that on top of complexity layers without using structured schema markup data in search engines. Like Perplexity.ai co-founder and CTO Denis Yarats says:

“I think Google is one of the

most complicated systems humanity has ever built.

In terms of complexity, it’s probably even beyond flying to the moon”

The Generative AI Challenge

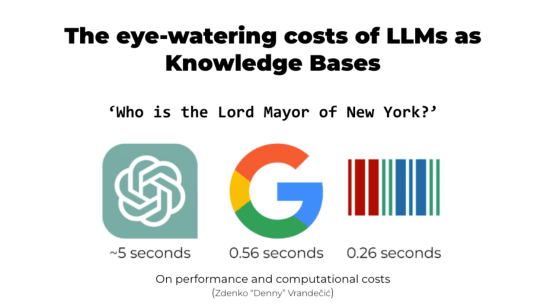

I’ve written several blog posts about generative AI, large language models (LLMs) for SEO and Google Search on this blog. However, until now, I didn’t have concrete numbers to illustrate the amount of time it takes to obtain information when prompting them.

Retrieving information is inherently challenging by design

I came across an analysis by Zdenko Danny Vrancedic that highlights the time costs associated with getting an answer to the same question from ChatGPT, Google, and Wikidata: “Who is the Lord Mayor of New York?”

In the initial comparison, as Denny suggests, ChatGPT runs on top-tier hardware that’s available for purchase. In the second scenario, Google utilizes the most expensive hardware that isn’t accessible to the public. Meanwhile, in the third case, Wikidata operates on a single, average server. It raises the question of how someone running a single server can deliver the answer more quickly than both Google and OpenAI? How is this even remotely possible?

LLMs face this issue because they lack real-time information and lack a solid grounding in knowledge graphs, hindering their ability to swiftly access such data. What’s more concerning is the likelihood of them generating inaccurate responses. The key statement for LLMs here is that they need to be retrained more often to provide up-to-date and correct information unless they use RAG and GraphRAG more specifically.

On the other hand, Google encounters this challenge due to its reliance on information retrieval processes tied to page ranking. In contrast, Wikidata doesn’t grapple with these issues. It efficiently organizes data in a graph-like database, storing facts and retrieving them promptly as needed.

In conclusion, when executed correctly, generative AI proves to be incredibly useful and intriguing. However, the task of swiftly obtaining accurate and current information remains a challenge without the use of structured data like schema markup. And the challenges don’t end there.

How text-to-video models can contaminate the online data space

Enter SORA, OpenAI’s latest text-to-video model. While the democratization of video production is undoubtedly positive, consider the implications of SORA for misinformation in unverified and non-professionally edited content. It has the potential to evolve into a new form of negative SEO. I delve deeper into this subject in my earlier article, “The Future Of Video SEO is Schema Markup. Here’s Why.“

Now, let’s discuss future model training. My essential question for you, dear reader, is: what labeling strategies can you use to effectively differentiate synthetic or AI-generated data, thereby avoiding its unintentional incorporation in upcoming LLM model training?

Researchers from Stanford, MIT, and the Center for Research and Teaching in Economics in Mexico endeavored to address this issue in their research work “What label should be applied to content produced by generative AI?”. While they successfully identified two labels that are widely comprehensible to the public across five countries, it remains to be seen which labeling scheme ensures consistent interpretation of the label worldwide. The complete excerpt is provided below:

“…we found that AI generated, Generated with an AI tool, and AI Manipulated are the terms that participants most consistently associated with content that was generated using AI. However, if the goal is to identify content that is misleading (e.g., our second research question), these terms perform quite poorly. Instead, Deepfake and Manipulated are the terms most consistently associated with content that is potentially misleading. The differences between AI Manipulated and Manipulated are quite striking: simply adding the “AI” qualifier dramatically changed which pieces of content participants understood the term as applying it….

This demonstrates our participants’ sensitivity to – and in general correct understanding of – the phrase “AI.” In answer to our forth research question, it is important from a generalizability perspective, as well as a practical perspective, that our findings appeared to be fairly consistent across our samples recruited from the United States,Mexico, Brazil, India, and China. Given the global nature of technology companies (and the global impact of generative AI), it is imperative that any labeling scheme ensure that the label is interpreted in a consistent manner around the world.”

And it does not even stop there! The world recently learned about EMO: a groundbreaking AI model by Alibaba that creates expressive portrait videos from just an image and audio. Like Dogan Ural reportedly shares, “..EMO captures subtle facial expressions & head movements, creating lifelike talking & singing videos…Unlike traditional methods, EMO uses a direct audio-to-video approach, ditching 3D models & landmarks. This means smoother transitions & more natural expressions…”. The full info can be found in his thread on X, while more technical details are discussed in Alibaba’s research paper.

Source: Dogan Ural on X

Google has even begun advising webmasters globally to include more metadata in photos. I anticipate that videos will follow, especially in light of the recent announcement of SORA. Clearly, schema markup emerges as the solution to tackle the challenges across all these use cases.

Measurable Schema Markup Case Studies

We’re grateful for everyone, either SEOs or entrepreneurs like Inlinks, the Schema.app, Schemantra and others, who push the boundaries of what’s possible and verifiable in the field of SEO through schema markup, fact-checking and advanced content engineering.

We especially take great pride in the WordLift team, which consistently implements technical marketing strategies using schema markups and knowledge graphs for clients’ websites, making a measurable impact in the process. We continually innovate and strive to make the process of knowledge sharing more accessible to the community. One such initiative is our SEO Case Studies corner on the WordLift blog, providing you with the opportunity to get a sneak peek into our thinking, tools, and clients’ results.

The conclusion? Schema markup is here to stay, big time.

Final Words

It’s been quite a journey, and I appreciate you being with us. I sincerely hope it was worthwhile, and you gained some new insights today. Conducting this study was no easy task, but with the support of an innovative, research-backed team, anything is possible!

Structured data with schema markup is firmly entrenched and all signs point definitively in that direction. I trust this article will influence your perspective and motivate you to proactively prepare for the future.

Ready to elevate your SEO strategy with the power of schema markup and ensure your data quality is top-notch? Let’s make your content future-proof together. Talk to our team today and discover how to transform your digital presence.

The post Schema Markup Is Here To Stay. Here’s The Evidence. appeared first on WordLift Blog.